Instagram makes teen accounts private as pressure mounts

Teen accounts will be private by default, and sensitive content will be limited.

Instagram is introducing new safety measures for teen accounts, making them private by default in an effort to protect younger users amid rising concerns about social media's impact on youth. Starting Tuesday, teens under 18 in the U.S., U.K., Canada, and Australia will automatically be placed into more restrictive accounts when they sign up. Existing accounts will be migrated to these settings within the next 60 days, with similar changes coming to the European Union later this year.

Meta acknowledged that teens may misrepresent their age and will now require additional age verification in more situations, such as when a user tries to create a new account with an adult birthdate. The company is also developing technology to identify teens who claim to be adults and automatically move their accounts into the restricted teen settings.

In these new accounts, teens can receive private messages only from people they follow or are already connected with. Content deemed “sensitive,” such as videos of violence or promotions for cosmetic procedures, will also be restricted. Teens will receive a notification after they use Instagram for 60 minutes, and a “sleep mode” will disable notifications and send automatic replies to direct messages from 10 p.m. to 7 a.m.

These settings apply to all teens, but 16- and 17-year-olds will have the option to turn them off, while teens under 16 will need parental consent to make such changes.

“The three concerns we’re hearing from parents are that their teens are seeing content they don’t want, being contacted by people they don’t know, or spending too much time on the app,” said Naomi Gleit, head of product at Meta. “Teen accounts are focused on addressing those three concerns.”

This announcement comes as Meta faces multiple lawsuits from U.S. states accusing the company of harming young people and contributing to a youth mental health crisis by designing Instagram and Facebook features to addict children.

New York Attorney General Letitia James welcomed Meta’s move, calling it “an important first step,” but stressed more needs to be done to protect children from the dangers of social media. Her office is working with other New York officials to implement a new state law aimed at limiting children’s access to what critics describe as addictive social media feeds.

Expanding parental controls

Meta’s previous attempts to enhance teen safety and mental health have faced criticism for not going far enough. For instance, while teens will receive a notification after 60 minutes of app use, they can easily ignore it and keep scrolling, unless a parent has activated the “parental supervision” feature, which allows parents to limit their child’s time on Instagram.

Under the new changes, Meta is giving parents more control over their children’s accounts. Teens under 16 will need a parent or guardian’s permission to switch to less restrictive settings. Parents can set up “parental supervision,” connecting their child’s account to their own, to oversee these changes.

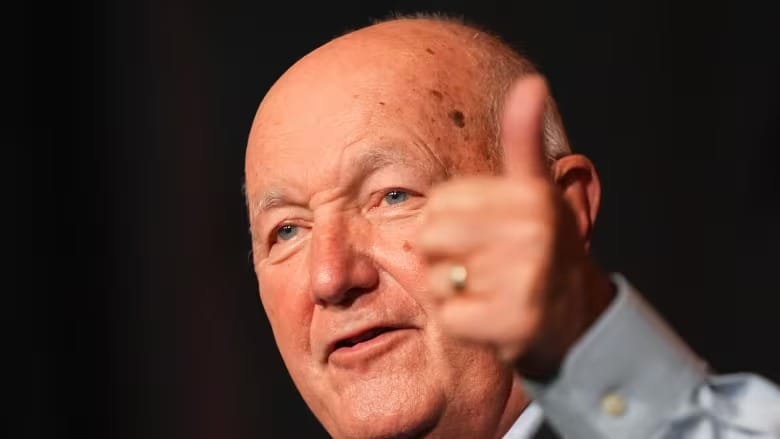

Nick Clegg, Meta’s president of global affairs, recently noted that parents have not widely adopted the parental controls introduced in recent years. Gleit believes the new teen account settings will encourage more parents to take advantage of these tools.

Through the Family Center, parents will be able to see who is messaging their teen and, ideally, talk with them about any issues such as bullying or harassment. Parents will also be able to see who their teen follows, who follows them, and whom they have messaged in the past week, giving parents greater visibility into their child’s online interactions.

U.S. Surgeon General Vivek Murthy has previously criticized tech companies for placing too much responsibility on parents to keep their children safe online. He highlighted the challenge of asking parents to manage rapidly evolving technologies that fundamentally change how young people perceive themselves, build relationships, and experience the world, technologies that previous generations never had to deal with.